Hi, I've recently been trying out MegEngine's int4 quantization scheme, following this example I found online:

https://github.com/MegEngine/examples/tree/main/int4_resnet50_test

However, I noticed that the dump.py provided in that example never calls quantize(model).

I'd also like to ask: can int4 models only be used on Ampere GPUs? Can a 3090 generate the corresponding int4 model?

Thanks.

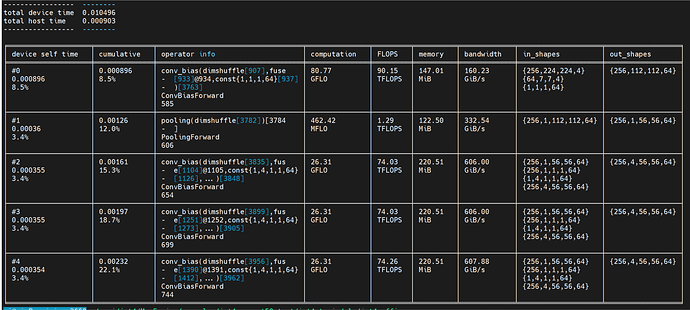

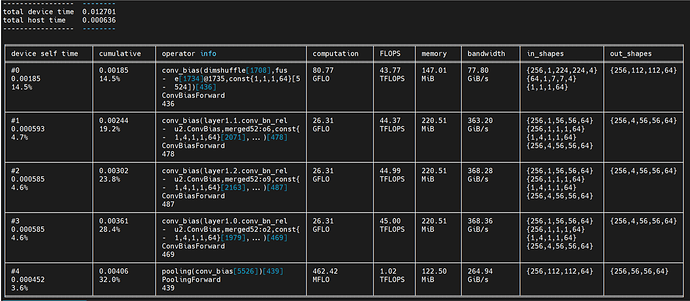

MegEngine int4 model

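I checked dump.py, and it indeed does not call quantize(model), so what gets dumped should be the QAT model; test_int4.py also feeds float32 input. If you need a quantized model, you can call quantize(model) and then dump it.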
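To make the QAT vs. quantized distinction concrete, here is a minimal pure-Python sketch contrasting real int4 quantization with the fake quantization used during QAT. It assumes a signed 4-bit range of [-8, 7], a single per-tensor scale, and round-to-nearest; the function names are hypothetical and are not MegEngine's API. Fake quantization snaps values onto the int4 grid but keeps them as floats, which is consistent with the QAT graph from dump.py still working on float32 tensors, while real quantization produces the integer codes a quantized model stores.

```python
# Hypothetical helpers illustrating int4 quantization; NOT MegEngine's API.
# Assumptions: signed int4 range [-8, 7], one per-tensor scale, round-to-nearest
# (Python's round() breaks exact .5 ties to the even integer).

QMIN, QMAX = -8, 7  # representable range of a signed 4-bit integer


def quantize_int4(values, scale):
    """Real quantization: map floats to clamped int4 codes."""
    return [max(QMIN, min(QMAX, round(v / scale))) for v in values]


def dequantize_int4(codes, scale):
    """Map int4 codes back to floats."""
    return [q * scale for q in codes]


def fake_quant_int4(values, scale):
    """QAT-style fake quantization: quantize, then immediately dequantize.

    The result is still a list of floats, but every value lies on the int4 grid,
    so training sees the rounding and clamping error without integer storage.
    """
    return dequantize_int4(quantize_int4(values, scale), scale)


x = [0.12, -0.5, 0.9, -1.3]
scale = 0.125
print(quantize_int4(x, scale))    # [1, -4, 7, -8]  (-1.3 / 0.125 = -10.4 is clamped to -8)
print(fake_quant_int4(x, scale))  # [0.125, -0.5, 0.875, -1.0]
```

With a scale of 0.125, -1.3 lies outside what the int4 codes can represent and is clipped to -1.0; picking the scale trades this clipping error against rounding error.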

int4 models can run on Ampere and newer architectures, and the 3090 is an Ampere card. Just use CUDA 11 or later.
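The requirement stated above can be written down as a simple check. This is a hypothetical helper, not part of MegEngine; it assumes you already know the GPU's compute capability and the CUDA version, and it encodes only the rule from the reply: an Ampere-or-newer GPU (compute capability 8.0 or higher) plus CUDA 11 or later.

```python
# Hypothetical helper (not a MegEngine API) encoding the rule from the reply:
# int4 needs an Ampere-or-newer GPU and CUDA 11 or later.

AMPERE_CC = (8, 0)   # Ampere GPUs report compute capability 8.x (A100: 8.0, RTX 3090: 8.6)
MIN_CUDA = (11, 0)   # "CUDA 11 or later"


def can_run_int4(compute_capability, cuda_version):
    """Both arguments are (major, minor) tuples; tuples compare lexicographically."""
    return tuple(compute_capability) >= AMPERE_CC and tuple(cuda_version) >= MIN_CUDA


print(can_run_int4((8, 6), (11, 4)))   # True: RTX 3090 (Ampere)
print(can_run_int4((7, 5), (11, 4)))   # False: Turing, e.g. RTX 2080 Ti
```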

I tried to dump the int4 computation graph, but it failed. Could you please take a look?

RuntimeError: no usable cuda convbias fwd algorithm

Yes, I tried doing that, but it failed. Could you please take a look?

It ultimately fails with: RuntimeError: no usable cuda convbias fwd algorithm

Could you post the full backtrace?

Traceback (most recent call last):
  File "dump.py", line 84, in <module>
    main()
  File "dump.py", line 44, in main
    worker(args)
  File "dump.py", line 78, in worker
    output = infer_func(data, model=model)
  File "/home/mi/.local/lib/python3.8/site-packages/megengine/jit/tracing.py", line 211, in __call__
    self._trace.exit()
RuntimeError: no usable cuda convbias fwd algorithm

backtrace:
/home/mi/.local/lib/python3.8/site-packages/megengine/core/lib/libmegengine_shared.so(_ZN3mgb13MegBrainErrorC1ERKSs+0x4a) [0x7fc15459f63a]
/home/mi/.local/lib/python3.8/site-packages/megengine/core/lib/libmegengine_shared.so(+0x2a9d4e7) [0x7fc1546014e7]
/home/mi/.local/lib/python3.8/site-packages/megengine/core/lib/libmegengine_shared.so(_ZN6megdnn12ErrorHandler15on_megdnn_errorERKSs+0x14) [0x7fc157d6c8b4]
/home/mi/.local/lib/python3.8/site-packages/megengine/core/lib/libmegengine_shared.so(_ZN6megdnn24get_algo_match_attributeINS_4cuda19ConvBiasForwardImplEEEPNT_9AlgorithmERKSt6vectorIPNS3_8AlgoBaseESaIS8_EERKNS7_8SizeArgsEmPKcRKNS_6detail9Algorithm9AttributeESM_+0x229) [0x7fc15a116569]
/home/mi/.local/lib/python3.8/site-packages/megengine/core/lib/libmegengine_shared.so(_ZN6megdnn4cuda19ConvBiasForwardImpl23get_algorithm_heuristicERKNS_12TensorLayoutES4_S4_S4_S4_mRKNS_6detail9Algorithm9AttributeES9_+0x10fd) [0x7fc15a117abd]
/home/mi/.local/lib/python3.8/site-packages/megengine/core/lib/libmegengine_shared.so(_ZNK3mgb4rdnn11AlgoChooserIN6megdnn15ConvBiasForwardEE17AlgoChooserHelper19choose_by_heuristicERKNS2_5param15ExecutionPolicy8StrategyE+0x40e) [0x7fc154945b0e]
/home/mi/.local/lib/python3.8/site-packages/megengine/core/lib/libmegengine_shared.so(_ZN3mgb4rdnn11AlgoChooserIN6megdnn15ConvBiasForwardEE10get_policyERKNS4_17AlgoChooserHelperE+0x1d0) [0x7fc1549db3b0]
/home/mi/.local/lib/python3.8/site-packages/megengine/core/lib/libmegengine_shared.so(_ZN3mgb3opr11AlgoChooserIN6megdnn15ConvBiasForwardEE10setup_algoERKSt5arrayINS2_12TensorLayoutELm5EEPS3_PKNS0_15ConvBiasForwardEb+0x7a9) [0x7fc15487d2b9]
/home/mi/.local/lib/python3.8/site-packages/megengine/core/lib/libmegengine_shared.so(_ZNK3mgb3opr15ConvBiasForward24get_workspace_size_bytesERKN6megdnn11SmallVectorINS2_11TensorShapeELj4EEES7_+0x6db) [0x7fc1547a351b]
/home/mi/.local/lib/python3.8/site-packages/megengine/core/lib/libmegengine_shared.so(+0x2cc2580) [0x7fc154826580]